Bill Gates and climatist collaborators are taking taxpayers and consumers on trillion-dollar rides

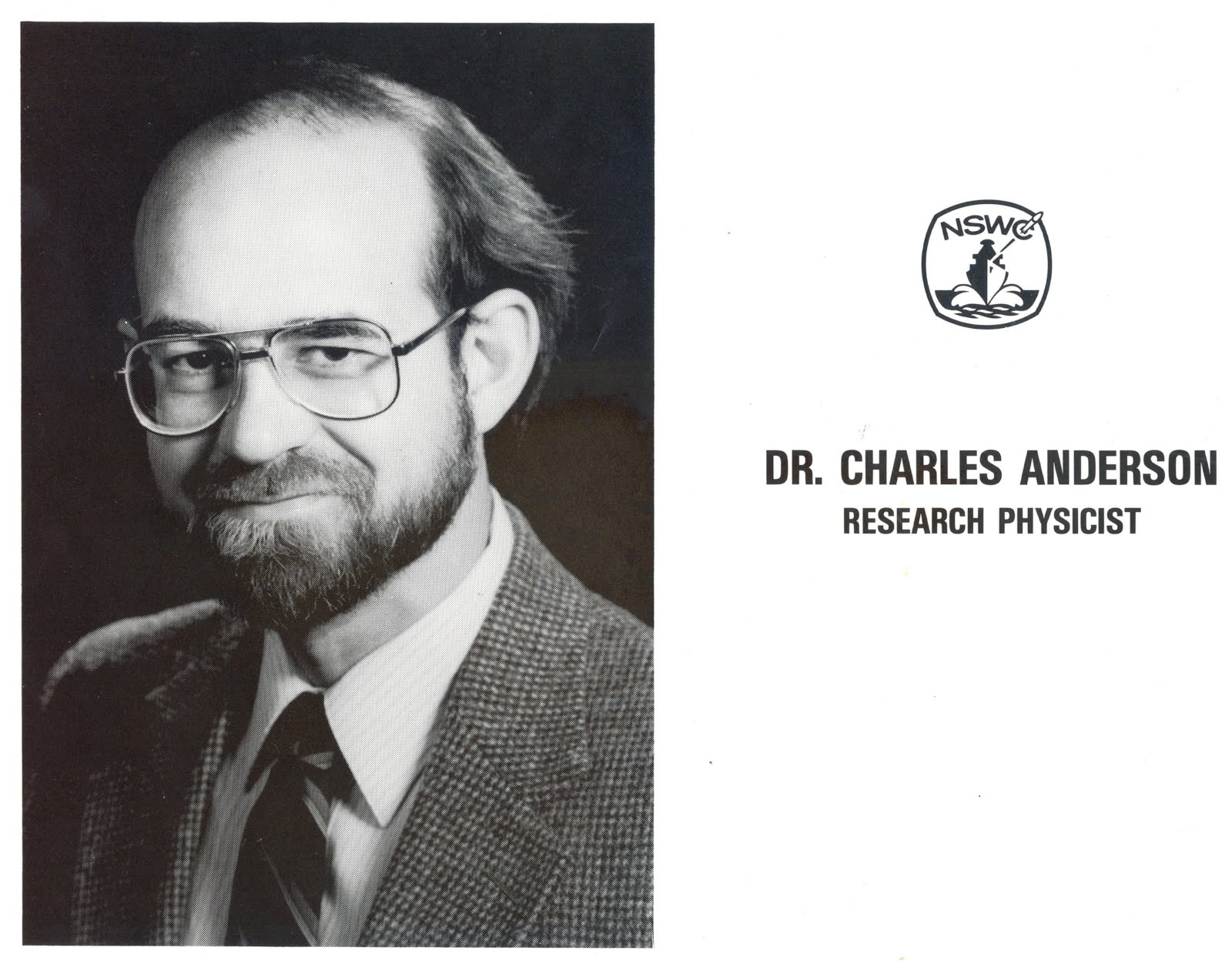

Paul Driessen

Grifters have long fascinated us. Operating outside accepted moral standards, they excel at persuading their “marks” to hand over valuables willingly. If they ever represented a “distinctly American ethos,” they’ve been supplanted by con artists seeking bank account details so they can “deposit” funds abandoned by Nigerian princes.

Their artful dodging is epitomized by Frank Abagnale daring the FBI to “catch me if you can,” Anna Sorokin inventing Anna Delvey, Redford and Newman masterminding their famous Sting, and dirty, rotten scoundrels like Steve Martin, Michael Caine and Glenn Headly.

However, they were all pikers compared to the billion-dollar stratagems being carried off by Climate Armageddon grifters like Bill Gates, Al Gore, Elon Musk and Biden Climate Envoy John Kerry.

Their long cons are not only unprecedented in size and complexity. They represent the greatest wealth transfer in history, from poor and middle class families to the wealthiest on Earth. Most important, the plundering has been legalized by laws, regulations, treaties and executive orders, often implemented at the behest of the schemers and their lobbyists.

(You have to wonder how Mark Twain would update his suggestion that “there is no distinctly native American criminal class except Congress.”)

They and their politician, activist, scientist, corporate and media allies profit mightily, legally if not ethically, from foundation grants, government payouts and subsidies, and taxpayer and consumer payments based on claims that Earth faces manmade climate cataclysms. Whether most of us are willingly giving our money to mandated “renewable energy” schemes and other corrupt practices is questionable.

Microsoft co-founder Gates’ estimated 2022 post-divorce net worth of some $130 billion enables him to donate hundreds of millions to social, health, environmental and corporate media causes. That usually shields him from tough questions.

But BBC media editor Amol Rajan recently asked Mr. Gates to answer charges that he’s “a hypocrite” for claiming to be “a climate change campaigner” while traveling the world on his luxurious private jets – often to confabs where global elites discuss how we commoners can enjoy simpler, fossil-fuel-free lives: what size our homes can be, how and how much we can heat them, what foods we can eat and how we can cook them, what cars we can drive, whether we can fly anywhere on vacation, what our kids will learn in school, and more.

Caught flatfooted, Gates defended his use of fuel-guzzling, carbon-spewing private jets by claiming he purchases “carbon credits” to offset his profligate energy consumption. He also said he visits Africa and Asia to learn about farming and malaria, and spends billions on “climate innovations.”

Indeed, Gates’ book “How to Avoid a Climate Disaster: The solutions we have and the breakthroughs we need” calls for replacing beef with synthetic meat. Cattle emit methane, a greenhouse gas (0.00019% of Earth’s atmosphere) – so people should eat fake meat processed from vegetable oil, veggies and insects.

You may say, “That’s disgusting.” But Mr. Gates will profit mightily if his “recommendation” is adopted. He’s a major investor in farmland and the imitation meat company Impossible Foods, as is Mr. Gore.

How cool! Wealthy elites can save the world and get richer at the same time!

Rival imitation-meat maker Beyond Meat’s stock may be down more than 75% from its one-time high, but investors will likely bring in lots more cash via new “climate-saving” diktats, while consumers are left holding bags of rotting bug and lab-grown burgers.

Carbon offsets? In the real world they’re part of the problem, not the solution. They don’t help Main Street; they too help rich Climate Armageddon Club members become wealthier.

Gates Foundation grants could prevent extensive African misery, brain damage and death from malaria by spotting disease outbreaks and eradicating Anopheles mosquito infestations – today. But the foundation is spending millions trying to engineer Plasmodium-resistant mosquitoes, which may pay off a decade from now.

Meanwhile, Elon Musk’s Tesla Inc. continues pocketing billions selling and trading carbon credits. In fact, between 2015 and 2020, the company received $1.3 billion from selling credits to other companies – more than twice what it earned from automotive sales. Times sure have changed since manufacturing tycoons got rich selling products, instead of hawking climate indulgences.

Musk also loves flying in private jets. Last summer, he even took a 9-minute, 55-mile flight from San Francisco to San Jose, instead of driving a Tesla. Wags might say that goes well with the way he and others have made a science of lobbying government agencies to subsidize fire-prone electric cars.

It’s all to protect the environment, of course – which is why Gore, Gates, Musk and Kerry think they’re entitled to travel by private jet and limousine. We’re also supposed to ignore how their cars and lifestyles are based on metals extracted and processed with African child labor and lakes of toxic chemicals.

Since Al Gore left the vice president’s office, he’s hauled in some $330 million railing about “rain bombs” and “boiling oceans,” and shilling for government and corporate “investments” in “green energy” that’s also reliant on supply chains running through Africa and China.

Never forget this fundamental rule: Wind and sunshine are clean, renewable and sustainable. However, harnessing these unreliable, weather-dependent energy sources to power modern economies requires millions of tons of metals and minerals extracted from billions of tons of ores, mostly using dirty, polluting processes in countries that are conveniently out of sight and mind.

In short, nothing about “renewable energy” is clean, renewable, sustainable, fair or equitable.

Moreover, the “climate crisis” is based on computer models that predict hurricane, tornado, flood, drought, sea level rise and other disasters vastly greater than the world is actually experiencing. The models also ignore five great ice ages and interglacial periods, the Medieval Warm Period and Little Ice Age, the Anasazi and Mayan droughts, and other inconvenient climate truths.

Topping it off, China, Russia and India are burning cheap coal to industrialize, lift people out of poverty, and leave climate-obsessed Western nations in the economic and military dust. Even if the West went totally Net Zero, it wouldn’t reduce atmospheric greenhouse gases even one part per million.

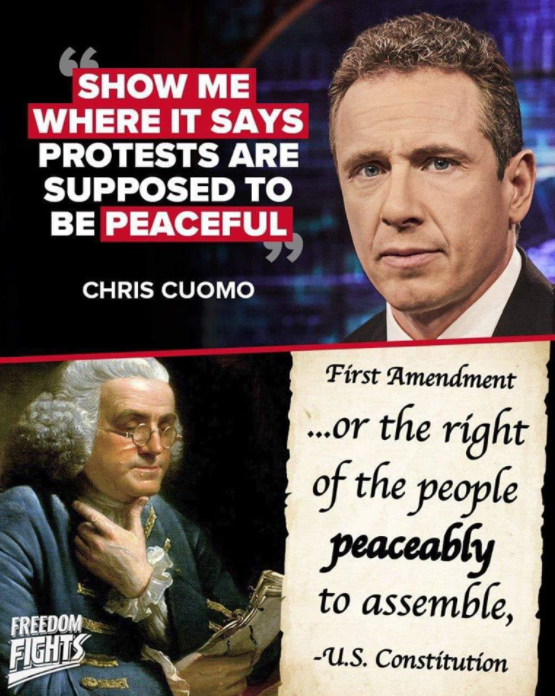

The climate change movement’s deceptions and contradictions seem boundless, and so does the loot its members can rake in by lobbying federal, state and local governments, banks and financial institutions; waging media warfare; and engaging in political science with like-minded legislators and regulators who control climate and energy laws, mandates, grants and subsidies.

What about ESG, financial disclosure, SVB, Credit Suisse, fiduciary responsibility, and accountability?

How can the general public be so oblivious to all of this?

FTX founder and alleged fraudster Sam Bankman-Fried revealed the secret. He avoided media and regulatory scrutiny by donating to influential media outlets, the way Bill Gates does. That garners favorable coverage from the press and social media, which also ignore, cancel and deplatform critics and skeptics.

Fortunately, gutsy interrogators like Rajan are discovering and publicizing what most of the bought-and-paid-for “journalist classes” still won’t. This helps more people see behind the curtain and find the self-interest, self-dealing and pseudo-science that create the scary climate crisis monsters.

Climate Armageddon Club games are costing us trillions of dollars, in the name of saving people and planet. Hopefully, more real journalists, troves of internal Twitter emails released as the “Twitter Files” (this time kudos to Mr. Musk!) and congressional investigations will save taxpayers and families from additional costly, destructive policies.

Paul Driessen is senior policy advisor for the Committee For A Constructive Tomorrow (www.CFACT.org) and author of books and articles on energy, climate change, environmental policy and human rights.